Alibaba has released Qwen3.5-397B-A17B, the newest flagship in its open-weight Qwen model family. Launched on February 16, 2026, the model delivers large gains in agentic performance and multimodal capability. By pairing a very large parameter count with efficient sparse computation, it aims to challenge both open-weight competitors and leading proprietary systems.

The Alibaba Qwen3.5-397B-A17B model is a major release for the company's Qwen research team, also known as Tongyi Lab. It is designed as a high-performance, cost-efficient foundation for the next generation of autonomous AI agents and is a significant step up from earlier Qwen models. On several benchmarks it approaches or matches the most advanced closed models, narrowing the gap between open-weight and proprietary systems.

Innovative Hybrid Architecture

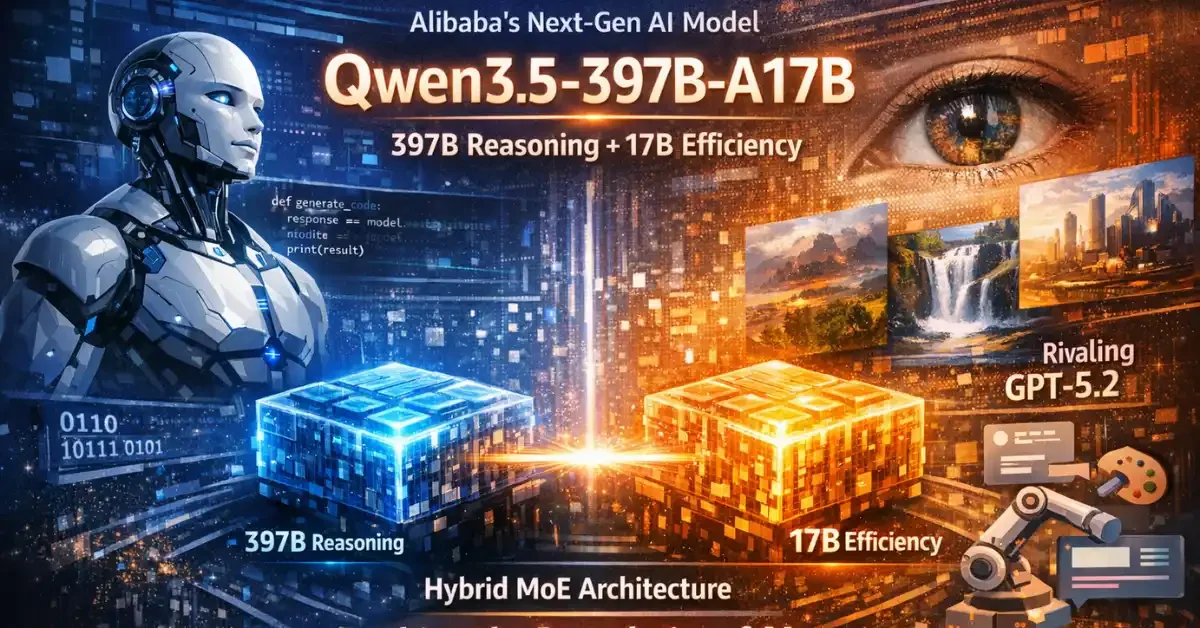

At its core, the Alibaba Qwen3.5-397B-A17B model is a Transformer that combines a sparse Mixture-of-Experts (MoE) framework with a hybrid design built around Gated Delta Networks, a form of linear attention. This combination lets the model hold 397 billion total parameters while keeping the computation spent on each token small.
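
The announcement does not give the layer equations. For orientation, here is a minimal sketch of one step of the gated delta rule from the published Gated DeltaNet research, assuming Qwen3.5's Gated Delta Network layers follow that formulation: a fixed-size memory matrix is updated as S_t = α_t · S_{t−1} · (I − β_t k_t k_tᵀ) + β_t v_t k_tᵀ, where α_t is a decay gate and β_t a write strength. The function names are hypothetical, and real layers run on batched tensors rather than Python lists.

```python
# Minimal sketch of one gated delta rule step (assumed formulation, not Qwen code):
#   S_new = alpha * S (I - beta * k k^T) + beta * v k^T
# S is a d_v x d_k memory matrix stored as a list of rows.


def matvec(S, x):
    """Multiply matrix S (list of rows) by vector x."""
    return [sum(s * xi for s, xi in zip(row, x)) for row in S]


def gated_delta_step(S, k, v, alpha, beta):
    """Return the updated memory after writing value v under key k.

    alpha in (0, 1] decays the old memory; beta in [0, 1] controls how
    strongly the old association stored for k is replaced by v.
    """
    Sk = matvec(S, k)  # value the memory currently returns for key k
    return [
        [alpha * (S[i][j] - beta * Sk[i] * k[j]) + beta * v[i] * k[j]
         for j in range(len(k))]
        for i in range(len(v))
    ]


def read(S, q):
    """Query the memory with q: o = S q."""
    return matvec(S, q)


# Write two different values under the same unit-norm key, alpha = beta = 1.
S = [[0.0] * 3 for _ in range(2)]
key = [1.0, 0.0, 0.0]
S = gated_delta_step(S, key, [0.5, -2.0], alpha=1.0, beta=1.0)
first = read(S, key)
S = gated_delta_step(S, key, [3.0, 1.0], alpha=1.0, beta=1.0)
second = read(S, key)
print(first, second)  # [0.5, -2.0] [3.0, 1.0]
```

Plain linear attention would add the second value on top of the first and return [3.5, -1.0]; the delta rule's correction term overwrites it instead, and setting α below 1 additionally lets old memories fade. The memory stays the same size no matter how long the sequence grows.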

During inference, the model activates only 17 billion of its parameters for each token, which is what the "A17B" in its name refers to. In principle, this gives it the capacity of a roughly 400-billion-parameter model at a per-token compute cost closer to that of a 17-billion-parameter dense model, although all 397 billion parameters must still be held in memory. Alibaba presents this design as a way to gain both scale and operational efficiency.
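
To make the sparse-activation idea concrete, here is a minimal toy sketch of generic top-k MoE routing, assuming a softmax router that is renormalized over the selected experts (a common design; Alibaba's announcement does not describe Qwen3.5's exact router, expert count, or k). All sizes and helper names are hypothetical.

```python
import math
import random

# Hypothetical toy sizes; Qwen3.5's real expert count and top-k are not stated here.
NUM_EXPERTS = 8
TOP_K = 2
DIM = 4


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    # Each toy "expert" just scales its input; real experts are feed-forward networks.
    return lambda x: [scale * xi for xi in x]


def moe_forward(token, experts, router, k):
    """Route one token to its top-k experts and mix their outputs."""
    scores = [sum(t * w for t, w in zip(token, row)) for row in router]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in chosen])  # renormalize over chosen experts
    out = [0.0] * len(token)
    for g, i in zip(gates, chosen):
        y = experts[i](token)  # only the k chosen experts are evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, chosen, gates


random.seed(0)
experts = [make_expert(s + 1) for s in range(NUM_EXPERTS)]
router = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [random.uniform(-1, 1) for _ in range(DIM)]
output, chosen, gates = moe_forward(token, experts, router, TOP_K)

# Share of Qwen3.5-397B-A17B's parameters used per token, from the stated figures.
ACTIVE_FRACTION = 17 / 397
print(f"experts used: {chosen}, active share: {ACTIVE_FRACTION:.1%}")
```

Per token, compute scales with TOP_K rather than NUM_EXPERTS, which is the effect the 17B-of-397B figure describes: about 4.3 percent of the weights do work for any given token.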

Benchmark Performance and Global Rankings

In independent testing, the Alibaba Qwen3.5-397B-A17B model holds its own against the world's top models. It scores 45 on the Artificial Analysis Intelligence Index, making it the third-ranked open-weight model in the world, behind GLM-5 (50) and Kimi K2.5 (47).

That score is a 16-point gain over Alibaba's previous open-weight release, Qwen3 235B. Analysts attribute most of the jump to the model's much improved agentic performance. Reports also indicate that the new model outperforms Alibaba's own larger, trillion-parameter model on certain benchmark tasks.
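
The reported figures can be cross-checked with a few lines of arithmetic. The only inputs are the numbers quoted above; the Qwen3 235B score below is derived from the stated 16-point gain, not separately reported.

```python
# Artificial Analysis Intelligence Index scores, as reported above.
scores = {"GLM-5": 50, "Kimi K2.5": 47, "Qwen3.5-397B-A17B": 45}
GAIN_OVER_QWEN3_235B = 16

ranking = sorted(scores, key=scores.get, reverse=True)
qwen = scores["Qwen3.5-397B-A17B"]
implied_qwen3_235b = qwen - GAIN_OVER_QWEN3_235B  # derived, not reported
gap_to_leader = scores[ranking[0]] - qwen

print(ranking.index("Qwen3.5-397B-A17B") + 1, implied_qwen3_235b, gap_to_leader)  # 3 29 5
```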

Against proprietary systems, the model performs on par with some of the industry's biggest names. Published benchmark charts show it rivaling or outperforming closed models such as GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro on various metrics, particularly in complex reasoning tasks.

Advanced Multimodal and Coding Capabilities

The new release is not a text-only model; it is a natively multimodal foundation model. It is built with early-fusion vision-language training, meaning image and text data are combined in a single token stream during training rather than vision being grafted onto a finished language model. As a result, it offers strong image and video understanding out of the box.
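
One common way to realize early fusion at the input level is sketched below, assuming a ViT-style design in which image patches are linearly projected into the same embedding space as text tokens and concatenated with them into one sequence. The announcement does not describe Qwen3.5's actual vision encoder, patch size, or special tokens; every size and name here is hypothetical.

```python
import random

# Hypothetical toy sizes; not Qwen3.5's real patch size or hidden width.
PATCH = 2
HIDDEN = 3


def patchify(image, p):
    """Split an H x W grayscale image (list of rows) into flattened p x p patches.

    Assumes H and W are multiples of p.
    """
    h, w = len(image), len(image[0])
    return [
        [image[r + i][c + j] for i in range(p) for j in range(p)]
        for r in range(0, h, p)
        for c in range(0, w, p)
    ]


def linear(x, weight):
    """Project vector x with a weight matrix given as HIDDEN rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in weight]


random.seed(0)
vocab = {"<img>": 0, "describe": 1, "this": 2}  # hypothetical toy vocabulary
text_embed = [[random.uniform(-1, 1) for _ in range(HIDDEN)] for _ in vocab]
patch_proj = [[random.uniform(-1, 1) for _ in range(PATCH * PATCH)] for _ in range(HIDDEN)]

image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # 4 x 4 toy image
image_tokens = [linear(p, patch_proj) for p in patchify(image, PATCH)]
text_tokens = [text_embed[vocab[w]] for w in ["describe", "this", "<img>"]]

# Early fusion: one sequence of same-width vectors feeds a single shared Transformer.
sequence = text_tokens + image_tokens
print(len(sequence), "tokens of width", HIDDEN)  # 7 tokens of width 3
```

Because image patches become ordinary sequence positions, the same attention layers relate words and image regions directly; video can be handled the same way by patchifying successive frames.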

Alibaba pairs these multimodal features with strong coding and agentic performance. In its demonstrations, the model independently builds high-quality websites and even develops a 3D racing game from user prompts.

Alibaba has also consolidated its model variants. Whereas the updated Qwen3 releases shipped as separate Instruct and Thinking versions, the Alibaba Qwen3.5-397B-A17B model supports both reasoning and non-reasoning modes within a single, unified model.
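
With one model covering both modes, reasoning becomes a per-request setting rather than a choice of checkpoint. A minimal sketch, assuming an OpenAI-style chat endpoint and a boolean `enable_thinking` field like the switch Qwen3 exposed; the model identifier is hypothetical, and this is not a documented Qwen3.5 API.

```python
import json

# Hypothetical model identifier; check the provider's catalog for the real name.
MODEL = "qwen3.5-397b-a17b"


def build_chat_request(prompt, thinking):
    """Build an OpenAI-style chat payload that toggles reasoning per request.

    "enable_thinking" mirrors the switch Qwen3 used; assumed to carry over to Qwen3.5.
    """
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "enable_thinking": thinking,
    }


fast = build_chat_request("Summarize this paragraph.", thinking=False)
deep = build_chat_request("Prove that sqrt(2) is irrational.", thinking=True)
print(json.dumps(deep, indent=2))
```

Both requests target the same model; only the flag differs, so an application can reserve slower, token-heavier reasoning for the prompts that need it.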

Unprecedented Speed and Token Efficiency

Efficiency is one of the release's headline features. Alibaba reports decoding throughput 8.6x to 19.0x that of Qwen3-Max: 8.6 times faster at a 32K-token context length, and 19.0 times faster at 256K tokens.
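
Throughput multiples are easiest to read as time saved. A quick sketch converting the reported multiples into decode time for a hypothetical job that takes Qwen3-Max 100 seconds; the baseline is invented for illustration, and real speedups depend on hardware, batch size, and serving stack.

```python
# Reported decoding-throughput multiples versus Qwen3-Max, by context length.
SPEEDUP = {"32K": 8.6, "256K": 19.0}


def decode_seconds(baseline_seconds, speedup):
    """Time for the same decoding work when throughput is `speedup` times higher."""
    return baseline_seconds / speedup


# Hypothetical baseline: a job that takes Qwen3-Max 100 seconds to decode.
for ctx, s in SPEEDUP.items():
    print(ctx, round(decode_seconds(100.0, s), 1))  # 32K 11.6, then 256K 5.3
```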

The standard version has a 262K-token context window, the same as the earlier Qwen3 235B. For developers who need more, a Plus version of the model extends the context window to 1 million tokens.
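
A simple pre-flight check shows how the window size constrains a request. This sketch assumes the prompt and the generated output share the same window, as is typical, and reads "262K" as 262,144 (2^18) tokens and "1 million" as 1,000,000; both exact values are assumptions.

```python
# Assumed exact sizes: 262K read as 262,144 (2**18) tokens and 1M as 1,000,000.
STANDARD_WINDOW = 262_144
PLUS_WINDOW = 1_000_000


def fits(prompt_tokens, max_output_tokens, window):
    """True if the prompt plus the requested output fit in the context window."""
    return prompt_tokens + max_output_tokens <= window


print(fits(250_000, 12_000, STANDARD_WINDOW))  # True
print(fits(260_000, 8_192, STANDARD_WINDOW))   # False
print(fits(260_000, 8_192, PLUS_WINDOW))       # True
```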

Beyond raw speed, the model is also relatively token-efficient. During intelligence benchmarking, it used approximately 86 million output tokens. That is slightly more than its predecessor, but fewer than competing models Kimi K2.5 and GLM-5, which used 89 million and 110 million tokens, respectively.
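
The size of those margins is worth computing, since "fewer tokens" covers very different gaps. The inputs are the reported figures above; nothing else is assumed.

```python
# Output tokens used during intelligence benchmarking, in millions (as reported).
TOKENS_M = {"Qwen3.5-397B-A17B": 86, "Kimi K2.5": 89, "GLM-5": 110}


def percent_fewer(a, b):
    """How many percent fewer tokens `a` used than `b`."""
    return (b - a) / b * 100


qwen = TOKENS_M["Qwen3.5-397B-A17B"]
for rival in ("Kimi K2.5", "GLM-5"):
    print(rival, round(percent_fewer(qwen, TOKENS_M[rival]), 1))  # 3.4, then 21.8
```

The margin over Kimi K2.5 is small, about 3 percent, while the margin over GLM-5 is closer to a fifth.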

Open-Source Availability for Developers

True to its commitment to the open-source community, Alibaba has released the model weights and configuration files under the permissive Apache 2.0 license, giving developers and researchers worldwide open access to a frontier-class model.

The model is available through several major platforms, including Hugging Face and NVIDIA NIM APIs. Developers who prefer a managed service can also access the model, including the 1-million-token Plus version, through the Alibaba Cloud Model Studio API.
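
Before self-hosting, it helps to estimate how much memory the weights need: because every expert must be resident even though only 17 billion parameters run per token, the full 397 billion count applies. A back-of-the-envelope sketch, assuming BF16 weights at 2 bytes per parameter (quantized variants would be smaller) and ignoring KV cache and activations. The Hugging Face repo ID is an assumption; `snapshot_download` with `allow_patterns` is a real `huggingface_hub` function, used here to fetch only the JSON configuration files.

```python
# Rough weight-memory estimate, assuming 2 bytes per parameter (BF16).
BYTES_PER_PARAM = 2
TOTAL_PARAMS = 397e9
ACTIVE_PARAMS = 17e9

# Assumed repository name; confirm it on Hugging Face before use.
REPO_ID = "Qwen/Qwen3.5-397B-A17B"


def weights_gb(params, bytes_per_param=BYTES_PER_PARAM):
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def fetch_config_only(repo_id=REPO_ID):
    """Download only the JSON config files, not the full weights."""
    from huggingface_hub import snapshot_download  # requires `pip install huggingface_hub`

    return snapshot_download(repo_id=repo_id, allow_patterns=["*.json"])


print(round(weights_gb(TOTAL_PARAMS)), "GB total,", round(weights_gb(ACTIVE_PARAMS)), "GB active per token")
# 794 GB total, 34 GB active per token
```

The sparse design cuts per-token compute, not storage: roughly 794 GB of BF16 weights must be loaded even though only about 34 GB of them are used for any single token.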