OpenAI has partnered with AI chipmaker Cerebras to add 750 megawatts of ultra-low-latency AI compute to OpenAI’s platform, with the goal of making its AI respond to users faster. The companies say the added capacity will be integrated into OpenAI’s inference stack in phases and will come online in multiple tranches through 2028.

In a separate announcement, Cerebras said the deal is a multi-year agreement to deploy 750 megawatts of Cerebras wafer-scale systems to serve OpenAI customers, rolling out in multiple stages starting in 2026. Cerebras described the deployment as the largest high-speed AI inference rollout in the world.

What the partnership aims to do

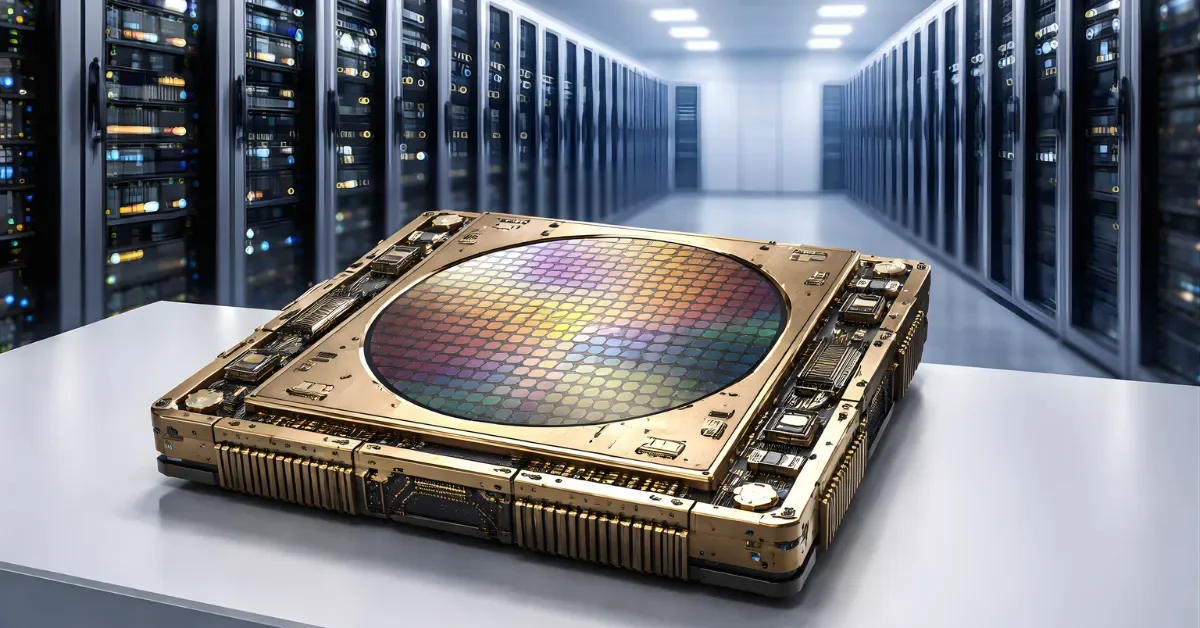

OpenAI said Cerebras systems are designed to accelerate long outputs from AI models. According to OpenAI, their speed comes from combining large amounts of compute, memory, and bandwidth on a single very large chip, which removes bottlenecks that can slow inference on conventional hardware. OpenAI framed the partnership as a way to make its AI respond much faster, especially in the interactive “request, think, respond” loops that occur when people use AI.

OpenAI said real-time responses can change how people use its tools, leading users to do more, stay longer, and run higher-value workloads. The company said it expects to expand this low-latency capacity across workloads as the integration progresses.

Where it will be used

OpenAI listed several common tasks where speed matters, including asking difficult questions, generating code, creating an image, and running an AI agent. It said the Cerebras capacity will be added as part of a broader “mix of compute solutions,” and the low-latency systems will be matched to the right workloads.

Cerebras also highlighted use cases such as coding agents and voice chat, saying large language models on Cerebras can deliver responses up to 15 times faster than GPU-based systems. Cerebras said this kind of speed can lead to higher engagement for consumers and open the door to new applications.

Timeline and scale

OpenAI said the partnership adds 750 megawatts of compute capacity, which will be rolled out in phases and arrive in multiple tranches through 2028.

Cerebras said the deployment will roll out in multiple stages beginning in 2026 under a multi-year agreement. Cerebras presented the project as a major step toward making fast AI inference more widely available.

What the companies said

Sachin Katti of OpenAI said the company’s compute strategy is to build a resilient portfolio that matches systems to workloads, and that Cerebras adds a dedicated low-latency inference solution meant to support faster responses and more natural interactions.

Andrew Feldman, co-founder and CEO of Cerebras, said the companies aim to bring leading AI models to what Cerebras calls the world’s fastest AI processor, and he compared the potential impact of real-time inference to the way broadband changed the internet.

In its blog post, Cerebras said the partnership was “a decade in the making,” noting that the two organizations were founded around the same time and that their teams have met frequently since 2017 to share research and early work. Cerebras also said the release of ChatGPT shaped the direction of the AI industry and that the challenge now is extending AI’s benefits broadly, with speed described as a key driver of adoption.